Anthropic Says Some Claude Models Can Now

Anthropic has announced that its latest AI models, known as Claude, now possess the ability to terminate harmful or abusive conversations.

Introduction to Anthropic’s Claude Models

Anthropic, an AI safety and research company founded in 2021 by former OpenAI employees, develops AI systems with an emphasis on ethical considerations and user safety. The company’s latest announcement concerns its Claude series of AI models, which are designed to hold human-like conversations while keeping interactions safe.

New Capabilities of Claude Models

According to Anthropic, the newest Claude models can identify conversations that are harmful or abusive and end them. The feature addresses a growing concern about the misuse of AI technologies in contexts such as online interactions and customer service.

These models are designed not only to understand the nuances of human language but also to recognize when a conversation veers into abusive territory. With this capability, Anthropic aims to create a safer space for users, especially in settings where AI interactions are more exposed to abusive behavior.

Understanding Harmful and Abusive Conversations

Harmful or abusive conversations can manifest in various forms, including:

- Verbal harassment

- Threats of violence

- Hate speech

- Manipulative or deceptive communication

AI systems often face challenges when distinguishing between benign and harmful interactions, making the development of this capability a noteworthy achievement for Anthropic. The ability to recognize and respond to such conversations not only enhances user experience but also aligns with ethical AI practices that prioritize user safety.

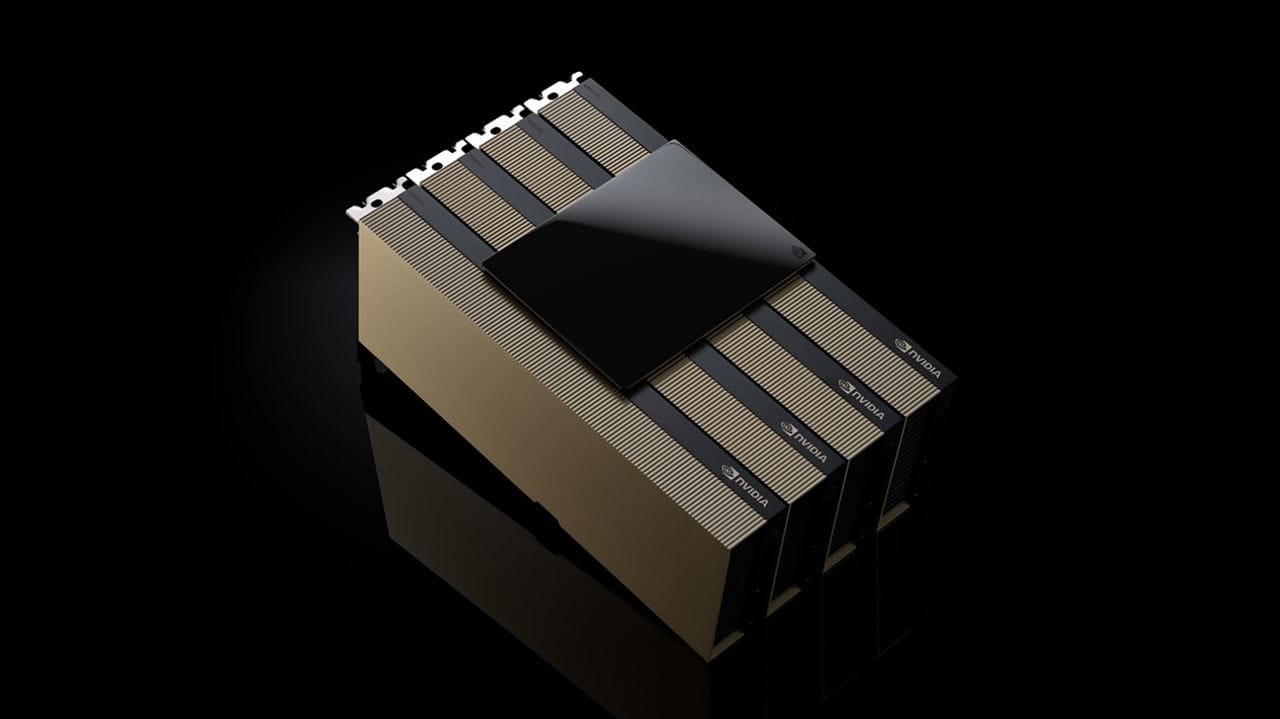

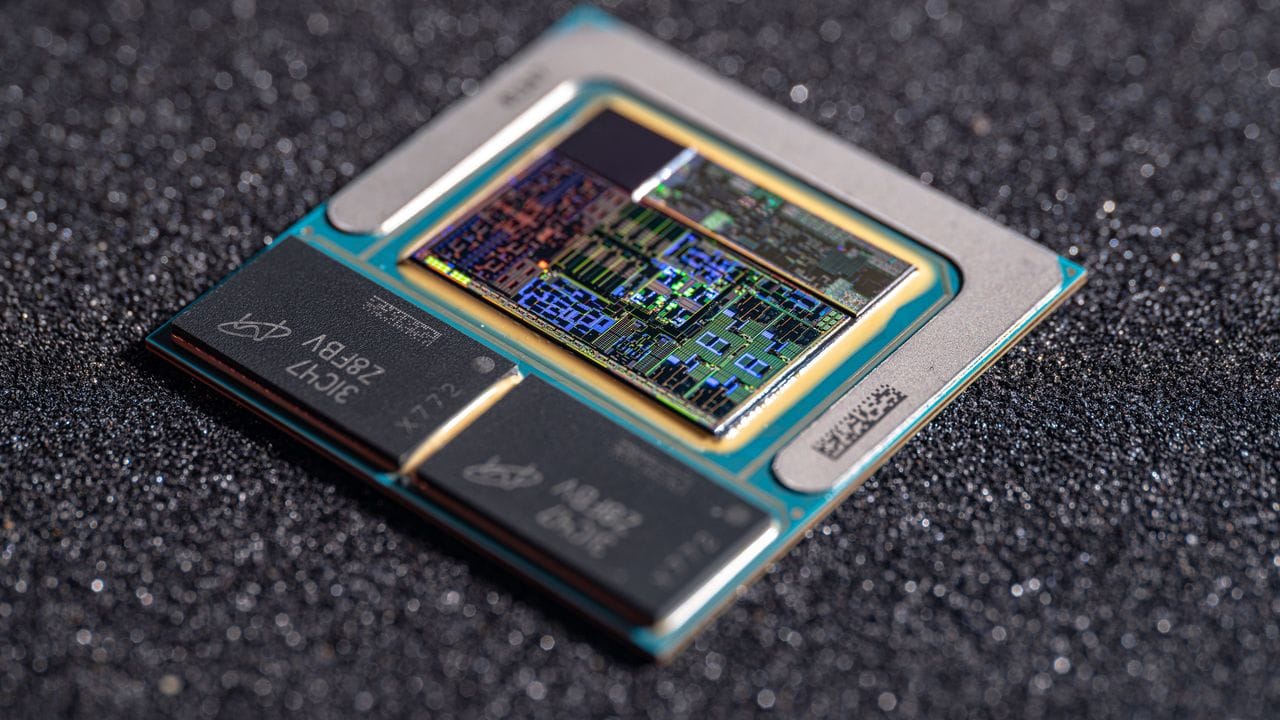

The Technology Behind Claude Models

The Claude models utilize advanced natural language processing (NLP) techniques, which allow them to analyze text and understand context effectively. This technology is grounded in machine learning algorithms that have been trained on vast datasets, enabling the models to learn from diverse conversational patterns.

Anthropic’s commitment to safety and ethical AI is reflected in its design philosophy, which emphasizes transparency and accountability. By incorporating safety measures directly into the model’s architecture, the company aims to mitigate the risks associated with AI misuse.

Stakeholder Impact and Implications

The introduction of these new capabilities has significant implications for various stakeholders, including:

- Businesses: Companies using AI for customer service can benefit from reduced instances of abusive interactions, leading to a more positive customer experience.

- Users: Individuals engaging with AI systems can feel safer knowing that harmful interactions can be promptly addressed.

- Regulatory Bodies: As governments and organizations increasingly scrutinize AI technologies, the implementation of safety features could help companies comply with emerging regulations.

Challenges Ahead

While the advancements made by Anthropic are commendable, challenges remain in the realm of AI safety. The complexity of human language means that no model is perfect, and there may be instances where the AI misinterprets a benign conversation as harmful. This could lead to unnecessary terminations of conversations, potentially frustrating users.
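The trade-off between catching abuse and ending benign conversations by mistake can be illustrated with a toy sketch. The code below is not Anthropic's method. It assumes a hypothetical per-turn harm score between 0 and 1 from some classifier, and the names `TerminationPolicy`, `threshold`, and `patience` are invented for illustration. The idea is simply that requiring several consecutive flagged turns, rather than one, makes a single misread message less likely to end a conversation.

```python
from dataclasses import dataclass


@dataclass
class TerminationPolicy:
    """Toy policy: end a conversation only after several consecutive flagged turns.

    All parameters are hypothetical and chosen for illustration only.
    """
    threshold: float = 0.9  # assumed score at or above which a turn counts as flagged
    patience: int = 3       # assumed number of consecutive flagged turns required
    streak: int = 0         # running count of consecutive flagged turns

    def observe(self, harm_score: float) -> bool:
        """Record one turn's score and report whether the conversation should end."""
        if harm_score >= self.threshold:
            self.streak += 1
        else:
            self.streak = 0  # a benign turn resets the count
        return self.streak >= self.patience


def should_terminate(scores, threshold=0.9, patience=3):
    """Return True if any point in the score sequence triggers termination."""
    policy = TerminationPolicy(threshold=threshold, patience=patience)
    return any(policy.observe(score) for score in scores)
```

Under these assumptions, a sequence with isolated high scores such as `[0.95, 0.2, 0.97, 0.1]` does not trigger termination, while three flagged turns in a row do. Raising `patience` reduces unnecessary terminations at the cost of letting abusive exchanges run longer.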

Moreover, the ethical implications of AI decision-making in sensitive contexts raise questions about accountability. As AI continues to evolve, the need for robust oversight mechanisms becomes increasingly crucial to ensure that these systems operate within ethical boundaries.

Future Developments in AI Safety

Anthropic’s efforts represent a broader trend in the AI industry towards prioritizing safety and ethical considerations. Companies are increasingly recognizing the importance of developing technologies that not only perform tasks efficiently but also respect user rights and promote safe interactions.

As AI continues to integrate into our daily lives, the focus on safety features will likely intensify. Future developments may include:

- Enhanced Contextual Understanding: Ongoing improvements in NLP could lead to better recognition of context, allowing AI to make more informed decisions about conversation termination.

- User Feedback Mechanisms: Implementing systems for users to provide feedback on AI interactions could help refine model responses and improve safety features.

- Collaborative Efforts: Collaboration between tech companies, regulatory bodies, and ethicists could foster the development of industry-wide standards for AI safety.

Conclusion

Anthropic’s announcement regarding the Claude models’ ability to end harmful or abusive conversations marks a significant step forward in the quest for safer AI interactions. By focusing on ethical considerations and user safety, the company sets a precedent for the future of AI technology.

As the landscape of AI continues to evolve, the integration of safety features will play a vital role in shaping user experiences and fostering trust in AI systems. The ongoing dialogue about the ethical implications of AI will be critical as we navigate the complexities of human-AI interactions in the years to come.

Source: Original reporting

Further reading: related insights.

Last Modified: August 17, 2025 at 2:13 pm
