Can today's AI video models accurately

Recent advancements in AI have sparked significant interest in generative video models, particularly their potential to understand and represent the physical properties of the real world.

Emergence of Generative Video Models

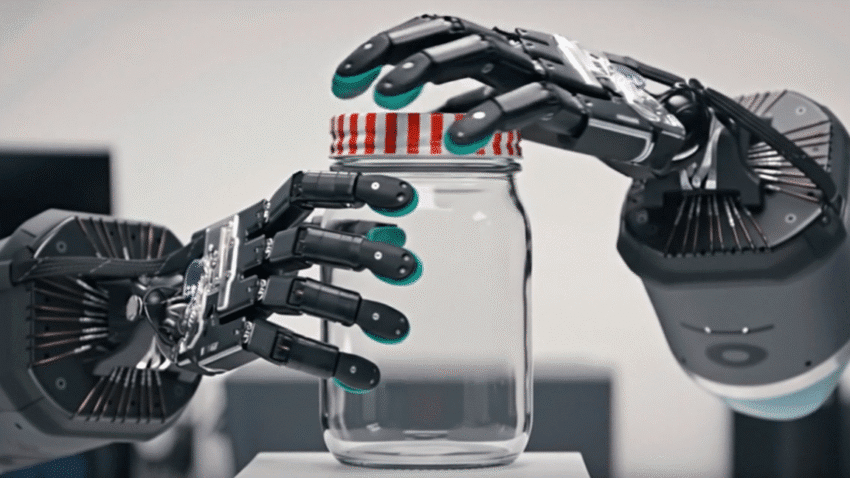

In recent months, enthusiasm for generative video models has surged across the AI community. These models create video content by learning from vast datasets, and they have shown promising capabilities in simulating real-world scenarios. The concept of a “world model,” which refers to an AI’s ability to understand and predict the dynamics of the physical environment, has gained prominence as a result. This capability could represent a substantial leap forward in generative AI, enabling applications that require a nuanced understanding of the world.

The idea of a world model is not entirely new; it has roots in cognitive science and robotics. However, recent advances in AI have made it increasingly feasible for machines to develop such models. The implications are profound: AI systems could not only generate content but also interact with the world in a more meaningful way.

DeepMind’s Research Initiative

To explore the capabilities of generative video models, researchers at Google DeepMind have undertaken a rigorous investigation. Their recent paper, titled “Video Models are Zero-shot Learners and Reasoners,” assesses how effectively video models can learn about the real world from their training data. The research is significant because it seeks to establish a scientific foundation for understanding the limitations and potential of these models.

DeepMind used its Veo 3 model to generate thousands of videos, which were then used to evaluate the model’s performance across a variety of tasks. These tasks were designed to test the model’s ability to perceive, model, manipulate, and reason about real-world scenarios. The researchers aimed to determine whether Veo 3 could handle tasks it had not been explicitly trained for, an ability known as “zero-shot learning.”

Zero-shot Learning Explained

Zero-shot learning refers to a model’s ability to generalize its knowledge to new tasks or scenarios without task-specific training, which makes it especially relevant to generative models. In the case of Veo 3, the researchers claim that the model can solve a broad variety of tasks it wasn’t explicitly trained for, suggesting a level of adaptability that could be transformative for generative AI.

However, the concept of zero-shot learning raises important questions about the reliability and consistency of the results produced by these models. While the ability to generalize is a significant milestone, it is essential to scrutinize the extent to which these models can accurately represent the complexities of the real world.

Results and Findings

In their findings, the DeepMind researchers assert that video models are on a trajectory to become unified, generalist vision foundation models. This claim suggests that as these models evolve, they could serve as foundational systems for a wide range of applications, from autonomous vehicles to virtual reality environments.

However, a closer examination of the results reveals that the researchers may be grading today’s video models on a curve. The experiments conducted with Veo 3 yielded a mix of successes and failures, indicating that while the model shows promise, it is not yet fully capable of consistently understanding and interacting with the real world. The researchers acknowledge that many of the results are highly inconsistent, which raises concerns about the reliability of the model’s capabilities.
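
To make the concern about inconsistency concrete, the short Python sketch below contrasts two ways of scoring repeated attempts at a task: the fraction of attempts that succeed, and whether any attempt succeeds at all. It is only an illustration under stated assumptions. The task names, the pass/fail outcomes, and the helper functions `success_rate`, `solved_at_least_once`, and `summarize` are all hypothetical and do not come from the DeepMind paper, whose scoring method is not described in this article.

```python
from typing import Dict, List


def success_rate(outcomes: List[bool]) -> float:
    """Return the fraction of attempts that passed."""
    if not outcomes:
        raise ValueError("need at least one attempt")
    return sum(outcomes) / len(outcomes)


def solved_at_least_once(outcomes: List[bool]) -> bool:
    """Return True if any single attempt passed (a lenient measure)."""
    return any(outcomes)


def summarize(results: Dict[str, List[bool]]) -> Dict[str, Dict[str, object]]:
    """Compute both measures for every task."""
    return {
        task: {
            "success_rate": success_rate(outcomes),
            "solved_at_least_once": solved_at_least_once(outcomes),
        }
        for task, outcomes in results.items()
    }


# Hypothetical pass/fail outcomes, invented purely for illustration.
# They are NOT results reported for Veo 3 or any other model.
hypothetical_results: Dict[str, List[bool]] = {
    "task_a": [True, True, True, True],
    "task_b": [True, False, False, False],
    "task_c": [False, False, False, False],
}

summary = summarize(hypothetical_results)
for task, stats in summary.items():
    print(
        f"{task}: {stats['success_rate']:.0%} of attempts passed, "
        f"solved at least once: {stats['solved_at_least_once']}"
    )
```

With these made-up numbers, `task_b` counts as solved under the lenient measure even though only one attempt in four passes. That gap is worth keeping in mind whenever a model is described as able to perform a task.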

Implications for Future Research

The findings from DeepMind’s research have significant implications for the future of AI development. As generative video models continue to evolve, it will be crucial for researchers to address the inconsistencies observed in current models. This may involve refining training methodologies, enhancing data quality, and exploring new architectures that can better capture the complexities of real-world interactions.

Moreover, the pursuit of a robust world model could lead to breakthroughs in various fields, including robotics, gaming, and simulation. By enabling AI systems to understand and predict real-world dynamics, researchers could unlock new possibilities for automation and intelligent decision-making.

Stakeholder Reactions

The research conducted by DeepMind has garnered attention from various stakeholders within the AI community. Industry experts and researchers have expressed both excitement and caution regarding the potential of generative video models. Many are optimistic about the advancements made thus far, viewing them as a stepping stone toward more sophisticated AI systems.

However, there are also concerns about the ethical implications of deploying AI systems that can generate realistic video content. The ability to create convincing fake videos raises questions about misinformation, deepfakes, and the potential for misuse in various contexts. As such, stakeholders are advocating for responsible AI development practices that prioritize transparency and accountability.

Ethical Considerations

The ethical considerations surrounding generative video models are multifaceted. On one hand, these technologies have the potential to revolutionize industries by enabling new forms of content creation and interaction. On the other hand, the risks associated with their misuse cannot be overlooked. The ability to generate realistic videos could be exploited for malicious purposes, such as spreading false information or creating deceptive content.

As a result, researchers and industry leaders are calling for the establishment of guidelines and frameworks to govern the development and deployment of generative AI technologies. This includes promoting transparency in AI systems, ensuring that users are aware of when they are interacting with AI-generated content, and implementing safeguards to prevent misuse.

Conclusion

The exploration of generative video models, particularly through the lens of DeepMind’s research, highlights the exciting potential and inherent challenges of this technology. While models like Veo 3 demonstrate the ability to learn and reason about the real world, the inconsistencies in their performance underscore the need for continued research and refinement.

As the field of generative AI evolves, it will be essential for researchers, industry leaders, and policymakers to collaborate in addressing the ethical implications and ensuring that these technologies are developed responsibly. The journey toward creating AI systems that can accurately model the complexities of the real world is ongoing, but the advancements made thus far provide a glimpse into a future where AI could play a transformative role in our understanding and interaction with the world around us.

Source: Original report


Last Modified: October 1, 2025 at 11:37 pm
